The Algorithm: How AI Can Hijack Your Career And Steal Your Future

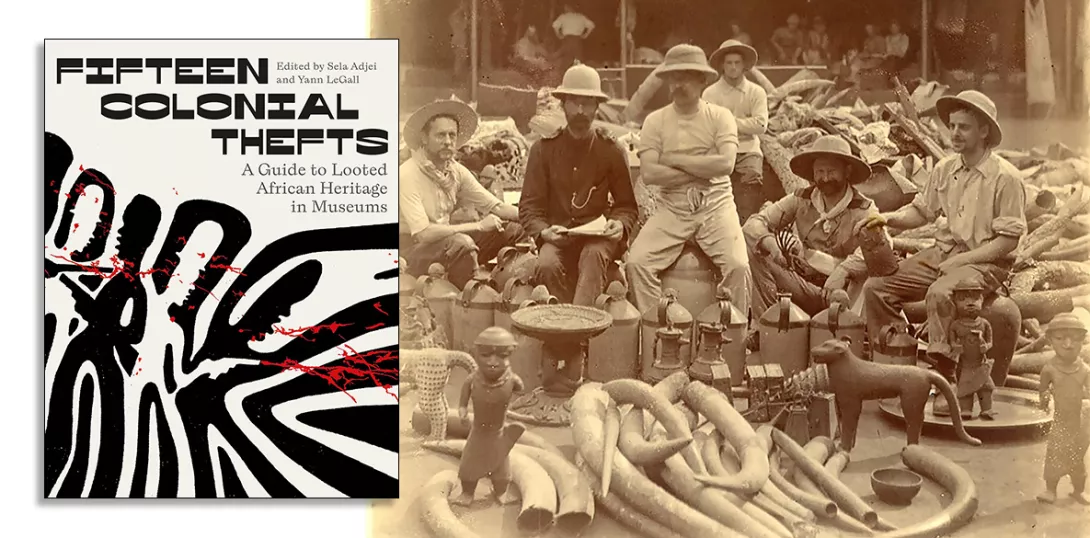

Hilke Schellmann, Hurst, £22

A FRIEND of mine once wrote a sketch about a management training guru who claimed to have invented a tool that could tell “whether you’re a blue, a green, a red or... a dickhead.” Compared with the products and procedures documented in Hilke Schellmann’s The Algorithm: How AI Can Hijack Your Career And Steal Your Future, the dickhead test is positively scientific.
