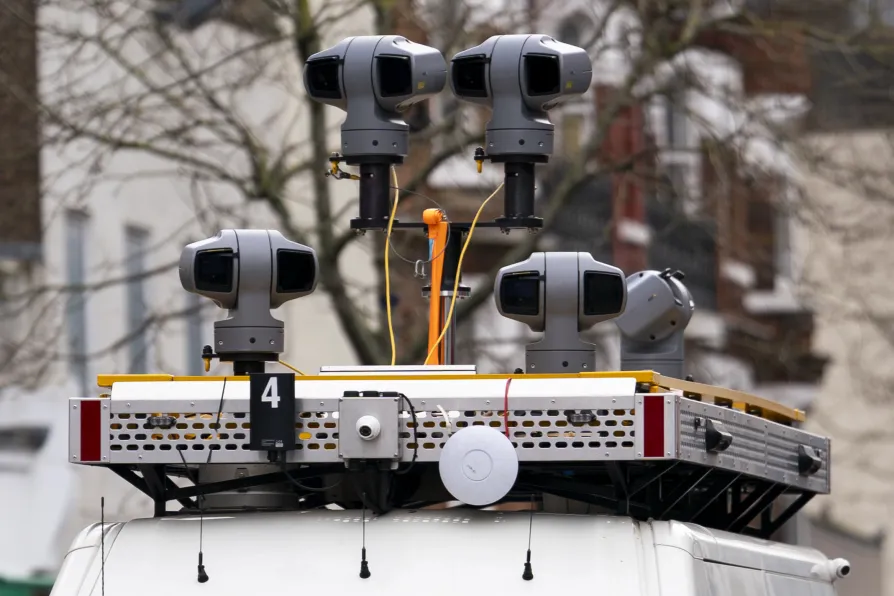

The Metropolitan Police deploying live facial recognition technology in Croydon, south London

CAMPAIGNERS sounded the alarm today over the Metropolitan Police’s plans to ramp up its use of invasive and unregulated live facial recognition (LFR) technology.

The force is set to double its use of LFR while it looks to slim down its workforce and boost protest surveillance.

The Met plans to cut 1,400 officers and 300 staff as part of a restructuring effort to address a £260 million budget shortfall.

Despite the cuts, the public order crime team, which investigates protest-related disorder, is set to grow from 48 to 63 officers.

Neighbourhood teams in crime “hotspots” will also be bolstered by an additional 170 officers.

Under the restructuring, LFR will be used up to 10 times per week across five days, up from four times across two days.

Sara Chitseko, of Open Rights Group, warned that the technology is “invasive, harmful and should not be used, let alone expanded, without parliamentary scrutiny or public debate.

“The government should halt the expansion of facial recognition, before we sleepwalk into a surveillance state.”

There is no legislation dedicated to police use of LFR. Instead, its governance is fragmented across decades-old policing laws, such as the Police and Criminal Evidence Act 1984, as well as data and human rights legislation.

Madeleine Stone, Senior Advocacy Officer at Big Brother Watch, said: “Facial recognition technology remains dangerously unregulated in the UK, meaning police forces are writing their own rules about how they use the technology and who they place on watchlists.

“This is an authoritarian technology that can have life-changing consequences when it makes mistakes, yet neither the public nor Parliament has ever voted on it.”

Big Brother Watch research found that over 89 per cent of live facial recognition alerts have wrongly identified members of the public as people of interest.

Anti-knife crime community worker Shaun Thompson is currently taking legal action against the Met after the technology wrongly flagged him as a criminal.

Met chief Sir Mark Rowley insisted that the technology is used responsibly, and that the force is deploying it “to look for serious offenders like wanted offenders and registered sex offenders.

“We routinely put it out there and capture multiple serious offenders in one go, many of whom have committed serious offences against women or children, or people who are wanted for armed robbery.”