TEN months ago, in April 2023, Google discontinued a device that it had worked on and publicised for 10 years. Google Glass looked like a pair of clear wrap-around glasses with a chunky bit at the top of the frame. The “chunky bit” was a tiny projector which projected onto part of the glasses themselves so that the user could see a screen in their field of vision. In September Google stopped updating or maintaining the Google Glass products that it had already sold.

What happened? Although the product had been through significant development since the original headset design, it was clearly not a moneymaker. The project started off at “X Development” (nothing to do with Elon Musk; it is the Alphabet subsidiary that Google uses for development projects).

The company trialled the product on a range of test users in an attempt to make the Google Glass technology seem useful and not creepy; for example, publicising its use by doctors to get a “surgeon’s eye view,” and also by a group of breastfeeding mothers who could apparently use the device to talk to experts and read advice while breastfeeding their babies.

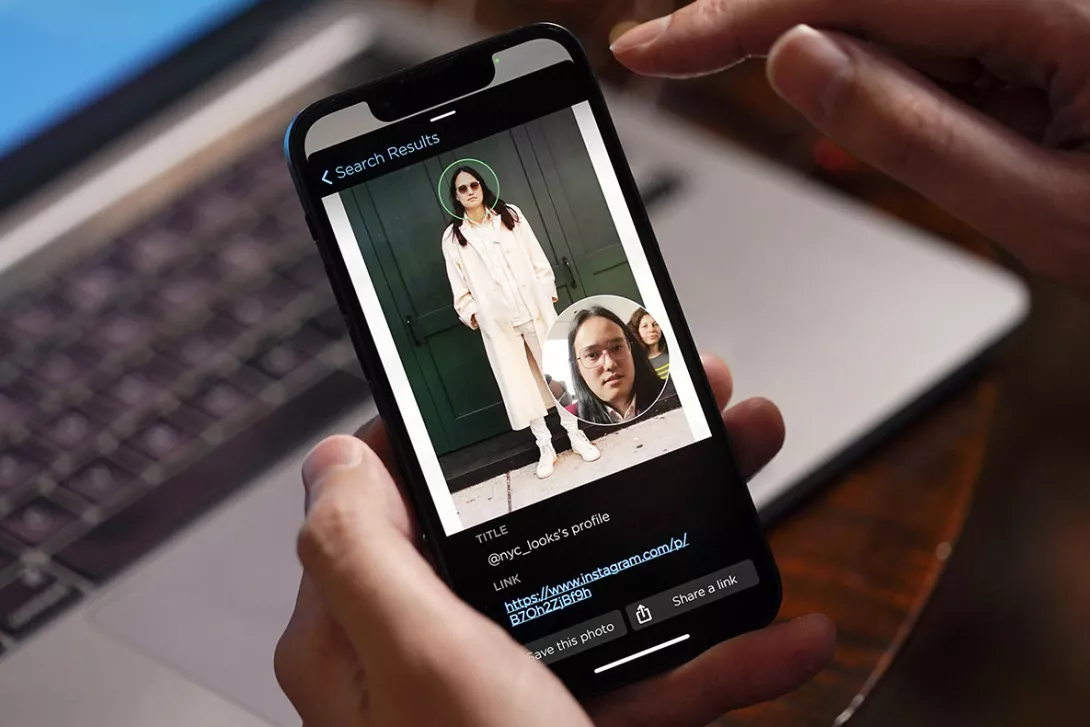

Despite these attempts, the device caused significant backlash, often because of the normalisation of covert surveillance by the user. Perhaps one of the creepiest things about the glasses was the capacity for the user to capture photos or video secretly through eye movement-controlled commands, without the people around them knowing they were doing it. The idea that the device allowed for secret surveillance made much publicity around the gadget highly negative — potentially the reason why it was recently dropped by Google.

The rejection of the device is, at best, the tiniest of setbacks for surveillance culture. The headline in surveillance used to be the number of CCTV cameras per thousand people in the population (London is often cited as having one of the highest numbers of cameras per inhabitant in the world, along with Indian and Chinese cities).

The number of CCTV cameras is still growing at a vast rate, especially with their widespread adoption by individuals; companies that project market size predict the value of the market doubling over the next four years. However, CCTV is just one part of the surveillance ecosystem now.

Another component is internet data, which is gathered in ever-increasing volumes, held by companies with economic and political power greater than that of many states, and sold to whoever pays (you too can buy some of this data if you have the money for it).

One of the largest sources of data is that which is freely given away by people who use social media, whether traditional social media or apps linked to activities like looking for a home, listening to music, ordering food or transferring money. This is partly data made public consciously: for example, Venmo, a popular money transfer app in the US, shares all the transactions an individual makes with their friends by default. It’s also the vast quantity of data the companies themselves have access to. The current estimate for Google searches is 5.9 million searches worldwide every minute, for example.

Although legislation like the EU’s General Data Protection Regulation (GDPR) was an attempt to push back against the incredible rise in unregulated data use, it hardly caused a shift in the pattern of behaviour. The introduction of GDPR saw a reduction in profits for smaller firms, but no effect on data giants like Facebook and Google.

GDPR (including the British version) is also supposed to protect us from entirely algorithmic decisions. Article 22 of the legislation protects individuals from entirely automated decisions “that have legal or similarly significant effects on them,” except where that right was signed away in agreeing to terms. It will surprise no-one to learn that studies show people do not read terms of service (GameStation even put a joke clause in its terms in 2010 that made users agree to surrender their souls to the company, which 7,500 customers agreed to).

Surveillance and data-gathering go hand in hand with automation, because data at this scale can’t be analysed by people alone. The increased use of automated decisions has produced what commentators have called an “algorithmically infused society.” It is this effect that explains the prosecution of 700 subpostmasters in the Horizon scandal, in which a private company, Fujitsu, was contracted by the Post Office to implement algorithmic control of the accounts of individual post office branches.

The accounting software was faulty, a fact that was covered up and led to the life-ruining maltreatment of hundreds of people by their employer. However, these victims were dragged through human courts. There was human involvement throughout: it was up to humans to decide how to interpret the algorithm, and they decided it was right.

It is only on rare occasions like this, or the grades algorithm that allocated societally mandated bad grades to working-class children during the pandemic, that the full force of what it means to live in an algorithmic society is made obvious. Most of the time, the conservatism of automation is almost entirely hidden.

The terrors of surveillance and control are nowhere more acutely obvious than in war. The Israeli occupation of Palestinian Territories is well known for its use of surveillance as a tool of war.

The Israeli firm that makes Pegasus, spyware that is used to hack phones, was sanctioned by the US because of its use against human rights defenders. Pegasus is used extensively against Palestinians.

In an operation called “Blue Wolf,” IDF soldiers were reported to record without consent the faces and identities of Palestinians. Surveillance camera data is used with facial recognition algorithms to track the population. Israel is far from alone, considering the vast resources employed in surveillance by the US and British governments (see “Project Tempora”), against people inside and outside the country.

Other occupying states also use surveillance as violence. Since the revocation of Jammu and Kashmir’s autonomous status in 2019, the Indian government has subjected the population there to repeated internet blackouts, mass surveillance by digital IDs, targeted use of Pegasus, and the censoring and deleting of social media accounts. Kashmiri shopkeepers are mandated to keep CCTV records and are prosecuted by the Indian government for not complying. This didn’t start in 2019. Mass surveillance has always been a tool of colonialism, and its development into digital surveillance has been in lockstep with digital advances. The first internet lockdown in Kashmir was implemented in 2012, and the CCTV laws have come into place since then.

Google Glass was rejected because it seemed “a bit creepy”: the presence of surveillance capitalism loomed between the user and the people they spoke to. Or perhaps it seemed like too active an embrace of the insidious data collection that’s enforced in every interaction in society now.